Story & Context

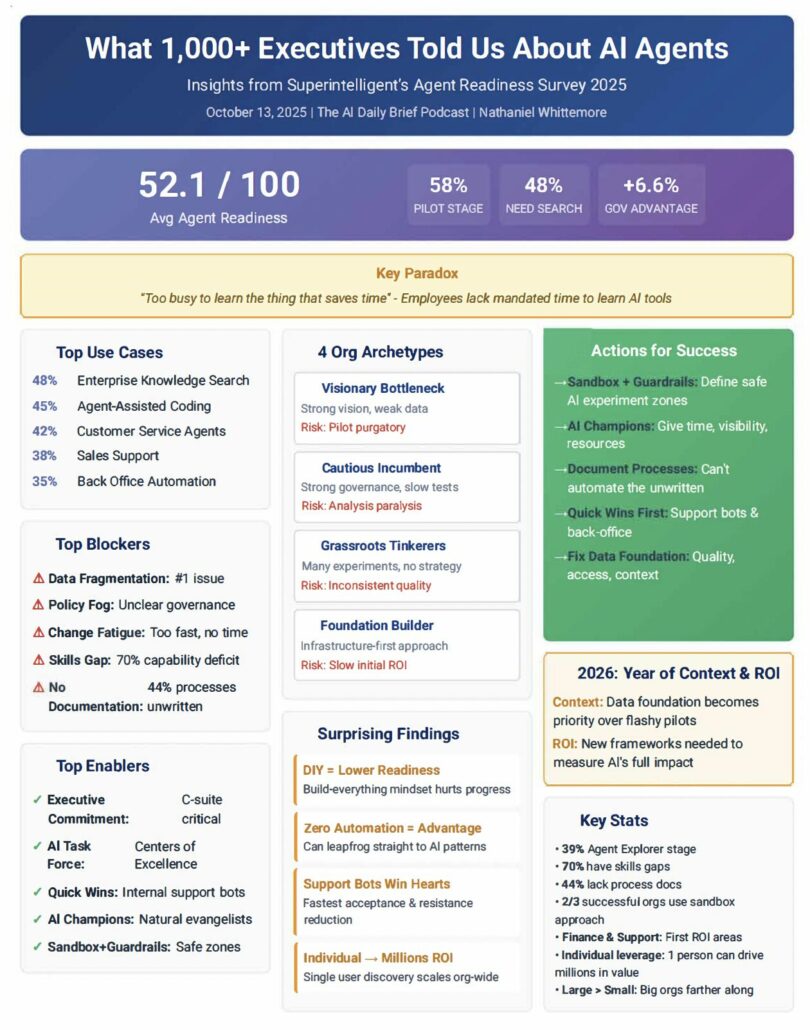

Over the past year I’ve had a front‑row seat to the weird world of pilot purgatory. In workshops, board meetings and random coffee chats in Finland, the same theme kept emerging: “We’re doing so many AI pilots… so why doesn’t anything change?” New research backs up those anecdotes. Superintelligent’s agent‑readiness study found that the average readiness score is just 52.1/100, and 58% of organizations remain stuck in the pilot stage. Seven in ten executives say their workforce has skills gaps and 44% admit their core processes aren’t even documented. In other words, most companies have dipped a toe into AI but haven’t built a bridge to the other side.

That gap shows up in sobering ways. BCG’s AI at Work 2025 report makes it clear that frontline employees aren’t empowered: only 36% feel they’ve been trained on the skills needed for AI transformation, and more than a third say they don’t have access to the right tools. When corporate solutions fall short, 54% say they would use unauthorised AI tools. Even more striking, only a quarter of frontline employees receive clear support from leadership on how and when to use AI. That disconnect breeds shadow AI and cynicism.

There’s another uncomfortable statistic that has been bouncing around conference halls: 95% of generative‑AI pilots never make it to production. An MIT study described in a 2025 Forbes piece notes that only 5% of custom GenAI tools survive the pilot‑to‑production cliff, while the rest remain brittle demo projects with no lasting value. Those same researchers argue that friction isn’t failure; it’s the crucible where systems learn and workflows get redesigned. If you avoid resistance, your pilots stall.

These numbers resonate because they mirror what I see every day. I’ve helped clients build internal support bots that save hours, only to watch the prototypes gather dust when the champion moves on. I’ve sat with managers who genuinely believe in AI yet can’t spare their teams three hours a week to learn. It isn’t malice or incompetence; it’s a mismatch between ambition and execution.

Insights: Four Levers That Separate Scalers from Stuck Pilots

1. Lead by example (not by memo)

BCG’s slides tell a blunt story: only 25% of frontline workers say they’ve received enough guidance from their leaders. But in teams with clear leadership support, 82% become regular AI users, compared with 41% where support is weak. The share of employees who feel very positive about GenAI’s impact on their career jumps from 15% to 55% when leaders model the way. Leadership isn’t about sending a PDF on responsible AI; it’s about opening your laptop in front of your team and saying, “Here’s how I’m using AI to plan my week, analyse reports or write strategy drafts.” In my workshops, the moment a senior manager starts prompting ChatGPT or Gemini live, everyone’s shoulders drop. Learning becomes permission rather than pressure.

2. Build a sandbox with guardrails

Many organisations talk about governance; few actually build it. When policies are fuzzy, people either avoid AI entirely or sneak around with personal accounts. Superintelligent’s research found that data fragmentation remains the number‑one blocker, and nearly half of companies lack documented workflows. Clear guardrails matter: firms with explicit AI policies and a safe “sandbox” are 6.6% more agent‑ready on average. That margin is the difference between experimentation and chaos. A sandbox doesn’t mean locking everything down; it means defining which data sets are okay to play with, which tools are approved, and how to request exceptions. When rules are transparent, you reduce shadow AI and the legal headaches that come with it.
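
To make the sandbox idea concrete, here is a minimal Python sketch of what a published policy could look like once it is written down as data rather than as a PDF. Everything in it is an assumption for illustration: the `SandboxPolicy` class, the tool and data set names, and the contact address are hypothetical, not taken from any of the studies above or from a real governance product.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SandboxPolicy:
    """Hypothetical living AI policy: approved tools, permitted data sets, exception route."""

    approved_tools: frozenset[str]
    permitted_datasets: frozenset[str]
    exception_contact: str

    def check(self, tool: str, dataset: str) -> tuple[bool, str]:
        """Return (allowed, message) for a proposed tool and data set combination."""
        problems = []
        if tool not in self.approved_tools:
            problems.append(f"tool '{tool}' is not approved")
        if dataset not in self.permitted_datasets:
            problems.append(f"data set '{dataset}' is not permitted")
        if problems:
            # Point people to the exception route instead of just saying no.
            return False, (
                "; ".join(problems)
                + f". Request an exception via {self.exception_contact}."
            )
        return True, "OK to experiment."


# Illustrative values only; replace with your organisation's own lists.
policy = SandboxPolicy(
    approved_tools=frozenset({"ChatGPT", "Gemini"}),
    permitted_datasets=frozenset({"anonymised_support_tickets"}),
    exception_contact="ai-governance@example.com",
)

allowed, message = policy.check("ChatGPT", "customer_crm_export")
print(allowed, message)
```

The design choice worth noting is that a rejected request always comes back with the exception route attached. That is the difference between a guardrail and a locked door: people learn what to do next instead of reaching for a personal account.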

3. Invest in data and context

You can’t scale what you can’t see. The top blocker across all the executive interviews I’ve read is data fragmentation and poor context. A support bot can’t answer questions if your CRM, ticketing system and wiki don’t talk to each other. Even slick AI tools fail without a connected data foundation. On top of that, 70% of organisations report workforce skills gaps and 44% say they haven’t documented their core processes. When a process isn’t written down, there’s nothing well‑defined for an agent to automate. The unsexy work of unifying data, documenting processes and cleaning up schemas is what turns AI into ROI. As one executive said in a recent discussion, “The year of context will become the year of ROI.”

4. Think big, start small, scale fast

The most successful companies treat every pilot as a seed, not a showcase. The MIT research highlights that 95% of pilots fail because they remain generic or disconnected. The 5% that succeed embed AI into high‑value workflows, integrate deeply and design for friction. They document what worked, share the playbook and then scale it to other teams. Often the first big win comes from something small: an internal Q&A agent that answers common questions, or an automated reporting task that frees up hours. The goal is not to run ten pilots; it’s to take one pilot across ten departments.

Framework: Turning Experiments into Systems

Here’s a simple framework I use when advising clients who want to move from pilots to real, measurable impact:

- Schedule time and model the behaviour. Carve out three to five hours a week for your teams to experiment with AI tools. BCG’s research shows that only 36% of employees feel properly trained, yet 79% of those who receive more than five hours of training become regular users, compared with 67% of those with less than five hours. Use that time to run live demos and share your own prompts. Learning is contagious when leaders go first.

- Create a governance sandbox. Publish a living policy that lists approved tools, permitted data sets and escalation paths. Encourage experimentation within those boundaries. Organisations with clear policies and guardrails achieve higher readiness and reduce shadow AI. Make it easy to request new tools; don’t force employees to choose between progress and compliance.

- Fix the data foundation. Start with one “safe” dataset—anonymised support tickets or past project documentation—and make it accessible through an approved tool. Unify high‑value domains and document the workflows around them. Remember that fragmented data and undocumented processes are the biggest barriers. Without context, even the best model is blind.

- Scale one pilot at a time. Choose a pilot with clear business value—like automating invoice processing or summarising customer conversations—and measure its impact. Share the results widely and turn the lessons into a template. Resist the urge to launch dozens of proofs of concept. As the MIT study shows, success comes from embedding AI deeply into workflows and embracing friction.

Adopting AI isn’t about chasing hype; it’s about redesigning work so that people and machines complement each other. The companies that succeed in 2026 will be the ones that build context, codify governance, invest in their people and treat each pilot as a blueprint for scale.

Closing Thought

After 2025, I’ve seen enough demos to last a lifetime. Tools are proliferating faster than we can name them, but value comes from context, leadership and follow‑through. If next year is to be the year of leadership and ROI, we have to turn experiments into systems. What will it take for your organisation to move from pilots to real, measurable impact in 2026?