Over the past decade I’ve helped teams in Finland and abroad build mobile apps, backend systems and, lately, AI‑powered products. When I’m called in to troubleshoot an AI initiative that’s stalled, the root cause is rarely the model or the code. It’s the people behind it, the data they’re allowed to touch, and whether leadership is willing to roll up their sleeves. A recent BCG survey found that only 36% of employees feel adequately trained to use AI. Worse, more than half of respondents (52%) say poor data quality and availability are the biggest obstacles to adoption. If the people aren’t prepared and the data isn’t connected, even the slickest model will flop.

I’ve trained hundreds of developers, designers and managers across industries, from healthcare to manufacturing. The pattern is depressingly consistent: leaders exhort their people to “use AI more,” yet most employees lack the time, knowledge and governance to do so. In Helsinki, I’ve seen talented engineers circumvent company policy by using personal ChatGPT accounts because the approved tools were too restrictive. That isn’t rebellion; it’s desperation.

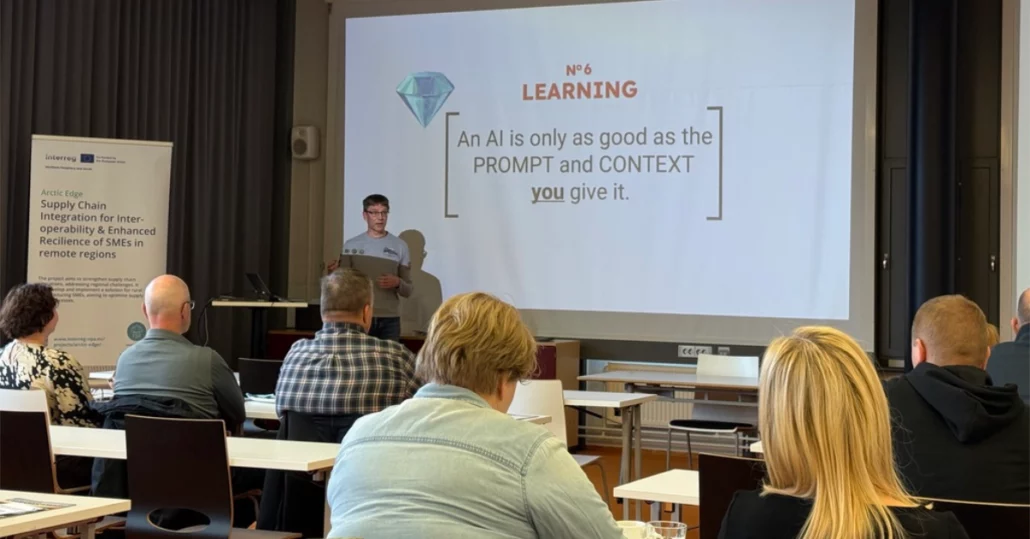

What I’ve Learned from Training Hundreds of Professionals

1. Time to learn is more precious than tech

During workshops, I often ask participants how many hours their employer has set aside for AI training. The answer is usually zero. BCG’s 2025 AI at Work report shows that regular AI usage skyrockets among employees who receive at least five hours of training and have access to in‑person coaching. Conversely, those who receive no training barely use AI at all. It’s not resistance; it’s busyness.

When people are given a few hours every week to experiment, they quickly discover practical uses: summarising meeting notes, drafting customer emails or generating test data. The problem is that many leaders are reluctant to take employees off billable work for training. In my experience, blocking even three hours a week for AI practice pays for itself within a month, because people start automating repetitive tasks. Without that breathing room, AI feels like yet another item on an overflowing to‑do list.

2. Disconnected data blinds AI

Fancy algorithms can’t see through silo walls. CMSWire notes that AI underperforms when customer or operational data remains siloed, fragmented or inaccessible. The same 2025 report found that 28% of businesses cite data silos and integration challenges as a top obstacle, nearly as significant as budget constraints. In my own projects, I’ve encountered companies with three different CRM systems and half a dozen ERP instances. Engineers end up copying CSV files by hand because the systems don’t talk to each other.

There’s no magic bullet, but starting small helps. Pick one “safe” dataset (say, anonymised support tickets) and make it accessible through an approved AI tool. Once teams see value, their appetite for sharing grows. Remember that more than half of surveyed professionals (52%) cite data quality and availability as the biggest adoption challenge; ignoring this will doom any pilot.
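To make the “safe dataset” idea concrete, here is a minimal Python sketch of preparing support tickets before they go anywhere near an AI tool. Everything in it is an assumption for illustration: the field names (`id`, `category`, `body`), the two regular expressions and the placeholder tokens are hypothetical, and pattern-based redaction on its own is not proper anonymisation. Treat it as a starting point to review with whoever owns data protection in your organisation.

```python
import re

# Hypothetical patterns for illustration only. They over-redact on purpose
# (any long digit run is treated as a phone number) and will still miss
# names, addresses and other personal data.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{6,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def prepare_tickets(tickets: list[dict]) -> list[dict]:
    """Keep only the fields the AI tool needs and redact the free text.

    Fields that are not listed here (customer name, account number, ...)
    are dropped rather than cleaned.
    """
    return [
        {"id": t["id"], "category": t["category"], "body": redact(t["body"])}
        for t in tickets
    ]
```

The design choice worth copying is the allow-list: the function names the fields that may leave the source system and silently drops the rest, so a new sensitive column added later does not leak by default.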

3. Policy gaps create “shadow AI”

When governance is unclear, people either don’t use AI at all or they use it secretly. According to BCG, over half of employees (54%) say they would use AI tools even if the company hadn’t authorized them. Among Gen Z and Millennials, that jumps to 62%, while only 43% of other employees would wait. I’ve witnessed this first-hand: developers feeding proprietary code into a personal chatbot because the sanctioned tools felt clunky. Shadow usage isn’t malicious; it’s a response to slow approvals and vague policies.

To fix this, leaders must build and communicate clear AI governance, not just publish a PDF on the intranet. Offer the best possible tools internally and define what data can be used. If people feel the corporate environment slows them down, they’ll go around it. Crucially, governance should empower experimentation while protecting sensitive data.

4. Pilot paralysis is real

Many companies run enthusiastic proof‑of‑concepts that never scale. A 2025 MIT NANDA initiative study found that 95% of enterprise AI pilots fail to deliver measurable financial returns. The issue isn’t the models—it’s the absence of organisational readiness, clear success metrics and consistent data. I’ve seen promising prototypes abandoned because the champion moved on or because there was no plan to integrate the model into production workflows.

The antidote is simple but hard: take one successful pilot and make it a standard. Document the process, share the story and measure the ROI. When people see a real example of time saved or revenue generated, scepticism turns into excitement.
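Measuring the ROI does not need to be sophisticated to be convincing. A rough sketch in Python, assuming you can estimate hours saved and you know what the pilot costs to build and run; the function name, its parameters and the numbers in the usage note are all hypothetical, and the formula ignores quality gains and revenue effects:

```python
def pilot_roi(
    hours_saved_per_month: float,
    hourly_cost: float,
    one_off_cost: float,
    monthly_running_cost: float,
    months: int,
) -> float:
    """Return simple ROI over the period: (benefit - cost) / cost.

    Benefit is counted only as the value of time saved, which is a
    deliberately conservative assumption.
    """
    benefit = hours_saved_per_month * hourly_cost * months
    cost = one_off_cost + monthly_running_cost * months
    if cost <= 0:
        raise ValueError("total cost must be positive")
    return (benefit - cost) / cost
```

With made-up inputs, `pilot_roi(100, 50, 8000, 1000, 12)` returns `2.0`: the pilot would return twice its total cost over a year. The real value of writing it down is that the assumptions become visible and open to challenge.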

Turning Lessons into a Practical Framework

Here’s how I approach AI readiness with clients, whether it’s a startup in Finland or a global enterprise:

- Schedule learning, don’t just encourage it. Carve out at least three hours per week for teams to experiment with AI tools. Make it part of working hours, not an after‑hours chore. As BCG’s data shows, even five hours of structured training doubles the likelihood of regular AI usage.

- Connect data one dataset at a time. Start by making a non‑sensitive dataset accessible through an approved AI platform. Use this to demonstrate value and build trust. Remember that data silos are cited as a top obstacle by nearly a third of organisations.

- Publish a living AI policy. Define what tools are approved, what data can be used and how to request new tools. Be transparent about how prompts and outputs are logged. More than half of employees will bypass restrictions if the rules aren’t clear, so clarity reduces risk.

- Scale one pilot. Choose a pilot with clear business value—like automating invoice processing or customer support. Measure time saved, quality improvements and employee satisfaction. Share the results widely to inspire others. Avoid running ten proof‑of‑concepts simultaneously; focus and finish.

- Invest in leadership training. Leaders set the tone. BCG reports that only a quarter of frontline employees receive strong AI guidance from their leaders. When leaders model AI use, employees are more positive about its impact on their career and job enjoyment.
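One way to keep the policy from the list above “living” is to express its core as data that tools and people can both read, rather than only as a document. A minimal Python sketch, assuming a three-level data classification and a mapping from each approved tool to the most sensitive level it may receive; the class name, the levels and the tool names are hypothetical and not taken from any particular product or standard:

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AIPolicy:
    """Hypothetical policy: which tools are approved, and for what data."""

    # Tool name -> most sensitive data classification allowed in that tool.
    approved_tools: dict[str, str] = field(default_factory=dict)
    # Classifications ordered from least to most sensitive.
    levels: tuple[str, ...] = ("public", "internal", "confidential")

    def is_allowed(self, tool: str, data_class: str) -> bool:
        """Return True if data of this classification may go into this tool."""
        ceiling = self.approved_tools.get(tool)
        if ceiling not in self.levels or data_class not in self.levels:
            # Unknown tool or unknown classification: deny by default.
            return False
        return self.levels.index(data_class) <= self.levels.index(ceiling)


policy = AIPolicy(
    approved_tools={
        "internal-assistant": "confidential",
        "public-chatbot": "public",
    }
)
```

Here `policy.is_allowed("public-chatbot", "internal")` is `False`, while `policy.is_allowed("internal-assistant", "internal")` is `True`. Adding a newly approved tool becomes a one-line change that can be reviewed and announced, which is much of what makes a policy feel alive.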

Closing Thoughts

As 2025 draws to a close, I’m hopeful that 2026 becomes the year of AI leadership and real ROI. We know what to fix: give people time to learn, connect the data, build policies that encourage responsible experimentation and scale the pilots that work. The rest is execution.